Probably the Most Expensive Ferrari Crash in History

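The most expensive Ferrari crash in history happened in Japan the day before yesterday, and it genuinely hurt to watch. These fiery red prancing horses were smashed up like scrap metal.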

These were all my favorite Ferraris from the '80s and '90s, and most of them had been modified, especially that white Testarossa. What a pity.

Finally, here is a video news report:

It seems Dell is already using e-MLC (enterprise MLC) SSDs in its latest PowerEdge 11G servers:

100GB Solid State Drive SATA Value MLC 3G 2.5in HotPlug Drive,3.5in HYB CARR-Limited Warranty Only [USD $1,007]

200GB Solid State Drive SATA Value MLC 3G 2.5in HotPlug Drive,3.5in HYB CARR-Limited Warranty Only [USD $1,807]
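Dividing the list prices above by capacity gives a quick per-GB comparison. A minimal sketch; the prices and capacities are copied from the two listings, and the rest is plain arithmetic:

```python
# List prices (USD) and capacities (GB) copied from the two Dell listings above.
LISTINGS = {
    "100GB SATA Value MLC": (1007, 100),
    "200GB SATA Value MLC": (1807, 200),
}


def price_per_gb(price_usd, capacity_gb):
    """Return the list price per GB in USD."""
    return price_usd / capacity_gb


for name, (price, capacity) in LISTINGS.items():
    print(f"{name}: ${price_per_gb(price, capacity):.3f}/GB")
```

By this measure the 200GB drive works out roughly 10% cheaper per GB than the 100GB one.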

I think the PowerEdge R720 will probably be released at the end of March. I don’t see the point of more cores: CPU is almost always the last resource to run out, while RAM is in fact the single most important resource, so more cores or higher GHz mean almost nothing to most ESX admins. Hmm… also, if VMware allows ESX 5.0 Enterprise Plus to use more than 256GB per processor, then that would be a real change.

Michael Dell dreams about future PowerEdge R720 servers at OpenWorld

Dell, the man, said that Dell, the company, would launch its 12th generation of PowerEdge servers during the first quarter, as soon as Intel gets its “Sandy Bridge-EP” Xeon E5 processors out the door. Dell wasn’t giving away a lot when he said that future Intel chips would have lots of bandwidth. As readers of El Reg know from way back in May, when we divulged the feeds and speeds of the Xeon E5 processors and their related “Patsburg” C600 chipset, that bandwidth is due to the integrated PCI-Express 3.0 peripheral controllers, LAN-on-motherboard adapters running at 10 Gigabit Ethernet speeds, and up to 24 memory slots in a two-socket configuration supporting up to 384GB using 16GB DDR3 memory sticks running at up to 1.6GHz.
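The capacity figure in the quote is simple multiplication, and the same kind of arithmetic gives a rough peak memory bandwidth. A small sketch, assuming I read "1.6GHz" as DDR3-1600 (1,600 MT/s), 8 bytes per transfer, and four memory channels per Xeon E5 socket; those three figures are my assumptions, not the article's:

```python
# From the article: 24 slots in a two-socket box, 16GB DDR3 sticks.
SLOTS = 24
DIMM_GB = 16
SOCKETS = 2

total_gb = SLOTS * DIMM_GB               # maximum memory per server
per_socket_gb = total_gb // SOCKETS      # memory attached to each CPU

# Assumptions (not from the article): DDR3-1600 = 1,600 MT/s,
# a 64-bit (8-byte) channel, and four channels per Xeon E5 socket.
TRANSFERS_PER_S = 1600e6
BYTES_PER_TRANSFER = 8
CHANNELS_PER_SOCKET = 4

per_channel_gbs = TRANSFERS_PER_S * BYTES_PER_TRANSFER / 1e9  # GB/s per channel
per_socket_gbs = per_channel_gbs * CHANNELS_PER_SOCKET        # GB/s per socket

print(total_gb, per_socket_gb, per_channel_gbs, per_socket_gbs)
```

These are theoretical peaks; sustained bandwidth is lower, and fully populating every slot can force the memory to run slower than its rated speed.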

But according to Dell, that’s not the end of it. He said that Dell would be integrating “tier 0 storage right into the server,” which is server speak for front-ending external storage arrays with flash storage that is located in the server and making them work together seamlessly. “You can’t get any closer to the CPU,” Dell said.

Former storage partner and rival EMC would no doubt agree, since it was showing off the beta of its own “Project Lightning” server flash cache yesterday at OpenWorld. The idea, which has no doubt occurred to Dell, too, is to put flash cache inside of servers but put it under control of external disk arrays. This way, the disk arrays, which are smart about data access, can push frequently used data into the server flash cache and not require the operating system or databases to be tweaked to support the cache. It makes cache look like disk, but it is on the other side of the wire and inside the server.
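To make the "array controls the server cache" idea concrete, here is a toy model. It is purely hypothetical and not EMC's or Dell's actual design: the array counts block accesses and pushes the hottest blocks into a flash cache inside the server, while the host read path simply checks that cache first, so the OS and database only ever see ordinary block reads. Writes and cache coherence are deliberately left out, and all class and function names are made up for illustration.

```python
from collections import Counter


class DiskArray:
    """Hypothetical external array: owns the data and sees every access."""

    def __init__(self, data):
        self.data = data          # block id -> contents
        self.hits = Counter()     # block id -> access count

    def note_access(self, block):
        self.hits[block] += 1

    def read(self, block):
        self.note_access(block)
        return self.data[block]

    def push_hot(self, cache):
        # The array, not the host OS, decides which blocks go to server flash.
        hottest = [b for b, _ in self.hits.most_common(cache.capacity)]
        cache.blocks = {b: self.data[b] for b in hottest}


class ServerFlashCache:
    """Hypothetical flash cache inside the server, filled only by the array."""

    def __init__(self, capacity):
        self.capacity = capacity  # number of blocks it can hold
        self.blocks = {}


def host_read(block, cache, array):
    """What the OS/database sees: a plain block read, wherever it is served from."""
    if block in cache.blocks:
        array.note_access(block)  # keep the array's heat map accurate
        return cache.blocks[block], "flash"
    return array.read(block), "array"
```

After a few reads, calling `array.push_hot(cache)` moves the most-read blocks into server flash, and later reads of those blocks come back tagged `"flash"` without the caller changing anything.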

Dell said that the new PowerEdge 12G systems, presumably with EqualLogic external storage, would be able to process Oracle database queries 60 times faster than earlier PowerEdge 11G models.

The other secret sauce that Dell is going to bring to bear to boost Oracle database processing, hinted Dell, was the system clustering technologies it got by buying RNA Networks back in June.

RNA Networks was founded in 2006 by Ranjit Pandit and Jason Gross, who led the database clustering project at SilverStorm Technologies (which was eaten by QLogic) and who also worked on the InfiniBand interconnect and the Pentium 4 chip while at Intel. The company gathered up $14m in venture funding and came out of stealth in February 2009 with a shared global memory networking product called RNAMessenger that links multiple server nodes together deeper down in the iron than Oracle RAC clusters do, but not as deep as the NUMA and SMP clustering done by server chipsets.

Dell said that a rack of these new PowerEdge systems – the picture above shows a PowerEdge R720, which would be a two-socket rack server using the eight-core Xeon E5 processors – would have 1,024 cores (that would be 64 servers in a 42U rack), 40TB of main memory (that’s 640GB per server), over 40TB of flash, and would do queries 60 times faster than a rack of PowerEdge 11G servers available today. Presumably these machines also have EqualLogic external storage taking control of the integrated tier 0 flash in the PowerEdge 12G servers.
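A quick sanity check of the rack figures. The sketch takes the article's numbers (64 two-socket servers, eight cores per socket, 40TB per rack) and shows how the per-server memory depends on whether "40TB" means binary or decimal terabytes:

```python
# Figures from the article.
SERVERS_PER_RACK = 64
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 8
RACK_MEMORY_TB = 40

cores_per_rack = SERVERS_PER_RACK * SOCKETS_PER_SERVER * CORES_PER_SOCKET

# Binary terabytes (1TB = 1024GB) versus decimal (1TB = 1000GB).
memory_per_server_gb = RACK_MEMORY_TB * 1024 / SERVERS_PER_RACK
memory_per_server_gb_decimal = RACK_MEMORY_TB * 1000 / SERVERS_PER_RACK

print(cores_per_rack, memory_per_server_gb, memory_per_server_gb_decimal)
```

The quoted 640GB per server only works out with binary terabytes. It is also more than the 384GB maximum quoted earlier for 16GB sticks, so the rack figure presumably assumes larger DIMMs.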

Update: March 6, 2012

Got an update from The Register on the upcoming 12G servers. One of the most interesting features is that the R720 now supports SSDs that connect directly to PCI-Express slots.

Every server maker is flash-happy these days, and solid-state memory is a big component of any modern server, including the PowerEdge 12G boxes. The new servers are expected to come with Express Flash – the memory modules that plug directly into PCI-Express slots on the server without going through a controller. Depending on the server model, the 12G machines will offer two or four of these Express Flash ports, and a PCI slot will apparently be able to handle up to four of these units, according to Payne. On early tests, PowerEdge 12G machines with Express Flash were able to crank through 10.5 times more SQL database transactions per second than earlier 11G machines without flash.

Update: March 21, 2012

It seems the SSDs that connect directly to PCI-Express are going to be external (front-mounted) and hot-swappable.

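SSD vendor Micron recently launched its first 2.5-inch solid state drive (Solid State Disk, SSD) with a PCIe interface. What sets it apart from the PCIe SSDs already on the market is that it is not an add-in card but a hot-swappable 2.5-inch SSD.

In recent years servers have also started shipping with SSDs, but the interface is still mostly SATA or SAS; alternatively, servers provide PCIe expansion slots so that enterprises can buy add-in-card SSDs to boost the server’s existing storage performance and capacity. PCIe delivers higher transfer rates, but the drawback is that PCIe expansion slots are mostly inside the server chassis and do not support hot-swapping. If an enterprise needs to add or replace a card, the server must be shut down and the chassis cover opened.

Dell’s latest 12th-generation PowerEdge servers add 2.5-inch drive bays with a PCIe interface, and Micron’s new PCIe 2.5-inch SSD can be installed in the front of these servers with hot-swap support. Besides keeping the advantage of high transfer speed, this also improves manageability, since IT staff can add and replace drives more easily.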

I found that someone (HansDeLeenheer) drew this very interesting relationship diagram. It’s like a love triangle, and very innovative and informative! I just love it!

![VENDORS](http://www.modelcar.hk/wp-content/uploads/2011/12/VENDORS1.jpg)

I’ve opened a case with EqualLogic, but so far there is no reply.

As I understand it, EqualLogic firmware 5.1 supports Auto Load Balancing, in which the firmware automatically relocates hot data to the appropriate group members.

In fact, there are two great videos (Part I and Part II) about this new feature on YouTube.

As we know, the best practice before FW 5.1 was to group members of the same generation and spindle type in the same storage pool. For example, place PS6000XV with PS6000XV, PS6000E with PS6000E, etc.

Now with FW 5.1, it is indeed possible to put whatever you want in the same pool (i.e., different generations and spindle types in the same pool), as Auto Load Balancing will take care of the rest.

Dell calls this Fluid Data Technology, a term actually borrowed from its recently acquired storage vendor Compellent.

My question is: in terms of performance and updated design best practices, does Dell EqualLogic still recommend the old way (i.e., separate storage tiers with similar generation and spindle speed)?

Update: Dec 12, 2011

Finally got a reply from Dell; it seems the old rule still applies.

The recommendation from Dell to use drives with the same drive speed is still the same within a pool. When you mix drives of different speeds, you slow the speed of the faster drives to the speed of the slower drives. The configuration should WORK but is not optimal and not recommended by Dell.

So my next question is: what’s the point of the new “Fluid Data Technology” feature if the old rule still applies? Huh?

Update: Dec 21, 2011

Received another follow-up from EQL support, which finally cleared up my confusion.

Load balancing is across disks, controllers, cache, and network ports. That means the storage, processing, and network ports access are balanced. Not ONLY disk speed.

Dell Equallogic customers create their own configuration. It is an option for you to add disks of different speeds to the group/pool; however, the disk spin speed will change to be the speed of the slowest drive. Most customers do not have a disk spin speed bottleneck; however, most customers are also aware of the rule-of-thumb in which they keep disks of like speeds together.

http://www.cns-service.com/equallogic/pdfs/CB121_Load-balancing.pdf

Update: Jan 18, 2012

After spending a few hours on the Dell Storage Community, I found the following useful information from different people. The answer is still the same.

DO NOT MIX different RPM disks in the same pool even with the latest EQL FW v5.1 APLB feature!

Yes, the new improvements to the Performance Load Balancing in v5.1.x and the sub-volume tiering performance balancing capabilities now allow for mixed drive technologies and mixed RAID policies coexisting in the same storage pool.

In your case, you would have mixed drive technologies (the PS400E, SATA 5k and the PS4100X, SAS 10k) with each member running the same RAID policies.

When the PS4100X is added to the pool, normal volume load balancing will take the existing volumes on the two PS400E’s and balance them onto the PS4100X. Once the balancing is complete and you then remove the PS400E from the group (which is the requirement to remove 1 PS400E), the volume slices contained on this member will be moved to the remaining two members and be balanced across both members (the PS400E SATA and PS4100X SAS) at that point.

Note, however, that Sub-volume performance load balancing may not be so noticeable until the mixed pools experience workloads that show tiering and are regular in their operating behavior. Because the operation takes place gradually, this could take weeks or EVEN MONTHS depending on your specific data usage.
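Why can tiering take weeks? One way to picture it is a rebalancer that moves only a limited number of pages per cycle. The sketch below is a hypothetical model, not EqualLogic's actual APLB algorithm: each page carries an access count from a sampling window, the hottest pages should live on the faster member, and each cycle swaps at most `max_swaps` hot/cold pairs, so the layout converges gradually. The function name, the two-member setup and the `fast`/`slow` labels are made up for illustration.

```python
def rebalance(location, counts, fast, slow, max_swaps):
    """One hypothetical rebalance cycle between a fast and a slow member.

    location:  dict page_id -> member name (fast or slow), updated in place
    counts:    dict page_id -> I/Os seen in the last sampling window
    max_swaps: cap on hot/cold page swaps per cycle, so convergence is gradual
    Returns the number of swaps performed.
    """
    on_fast = [p for p, m in location.items() if m == fast]
    k = len(on_fast)  # assume the fast member's page slots are all in use
    # Ideally the k hottest pages overall would live on the fast member.
    ranked = sorted(location, key=lambda p: counts.get(p, 0), reverse=True)
    wanted = set(ranked[:k])
    # Hot pages stuck on the slow member, hottest first.
    promote = [p for p in ranked[:k] if location[p] != fast]
    # Cold pages occupying the fast member, coldest first.
    demote = sorted((p for p in on_fast if p not in wanted),
                    key=lambda p: counts.get(p, 0))
    swaps = 0
    for hot, cold in zip(promote, demote):
        if swaps == max_swaps:
            break
        # Swapping keeps each member's used capacity unchanged.
        location[hot], location[cold] = fast, slow
        swaps += 1
    return swaps
```

Run once per sampling window with a small `max_swaps`, this needs several cycles to settle even for a handful of pages, which is the same reason real sub-volume balancing is gradual.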

Arisadmin,

Hi, I’m Joe with Dell EqualLogic. Although we support having members with mixed RAID policies in the same pool, in your case this is not advisable due to the two different drive types on your two members, i.e., your PS6000E is a SATA and your PS6000XV is a SAS. Mixing different drive types in the same pool will most likely degrade performance in the group.

If the arrays were of the same drive type, i.e., both SATA (or both SAS), then combining the two (RAID 10 and RAID 6) would not be a problem; however, the actual benefits, in your case, may not be as great as expected.

In order for load balancing to work “efficiently”, the array will analyze the disk I/O for several weeks (2-3) and determine if the patterns are sequential (RAID 10/5/6) or random (RAID 10), and would migrate those volumes to the corresponding member.

However, in a two-member group this is often less efficient, hence EQL always suggests a three-member group for load balancing.

The array will try to balance across both members, and you may end up with 80% of the volume on one member and 20% on the other member instead of a 50/50 split.

We also support manually assigning a RAID level to the volume, but this would, in effect, eliminate the load balancing that you are trying to achieve, since it is only a two-member group.

So in summary, we don’t recommend combining different Drive types (or Disk RPM) in the same pool.

You can go to http://www.delltechcenter.com/page/Guides and review the following documents for more information:

Deploying Pools and Tiered Storage in a PS Series SAN

PS Series Storage Arrays: Choosing a Member RAID Policy

Regards,

Joe
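Joe mentions the array watching disk I/O for a few weeks to decide whether access is sequential or random. A toy version of that classification, assuming requests arrive as `(lba, length)` pairs and using an arbitrary 50% threshold (both are my assumptions; EqualLogic does not document its heuristic here):

```python
def sequential_fraction(requests):
    """Fraction of requests that start exactly where the previous one ended.

    requests: list of (lba, length_in_blocks) tuples in arrival order.
    """
    if len(requests) < 2:
        return 0.0
    follows = sum(
        1
        for (prev_lba, prev_len), (lba, _) in zip(requests, requests[1:])
        if lba == prev_lba + prev_len
    )
    return follows / (len(requests) - 1)


def classify(requests, threshold=0.5):
    """Label a workload 'sequential' or 'random' (threshold is an assumption)."""
    return "sequential" if sequential_fraction(requests) >= threshold else "random"


print(classify([(0, 8), (8, 8), (16, 8), (24, 8)]))      # back-to-back reads
print(classify([(0, 8), (1000, 8), (50, 8), (7000, 8)]))  # scattered reads
```

A real array would accumulate statistics like this over a long window, which is one reason a short test period tells you little.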

This is a very popular and frequently asked question regarding what type of disks and arrays should be used in a customer’s environment. First, you asked about the APLB (Automatic Performance Load Balancing) feature in EqualLogic arrays.

Yes, it will move “hot blocks” of data to faster or less-used disks, but it’s not instantaneous. This load balancing, better known as sub-volume load balancing, uses an advanced algorithm that monitors data performance over time. I recommend reading the APLB whitepaper, which should help you better understand how this technology works.

See here: www.virtualizationimpact.com

In terms of what disks to buy, that comes down to what you are going to require in your environment. From my experience and from reading other forums, if you are trying to push for the best performance and capacity, I would look at the X series arrays or 10K drives. You can now get 600GB 10K drives in 2.5" and 3.5" form factors (I believe), and you won’t have to worry whether your 7200 RPM drives will be able to keep up with your workload; at least they will be faster, and you can mix them with the 15K SAS/SSD arrays. Not saying that the 7200s won’t work, it just depends on your requirements.

Hope that’s some help; maybe someone else will chime in with more info too.

Jonathan

The best solution is to work with Sales and get more specific about the environment than can easily be done via a forum. Your entire environment should be reviewed. Your IOPS requirements will determine whether you can mix SAS/SATA in the same pool and still achieve your goals.

One thing, though: it’s not a good idea to mix the XVS with non-XVS arrays in the same pool. Yes, the tiering in 5.1.x firmware will move hot pages, but it’s not instant, and you’ll lose some of the high-end performance features of the XVS.

Regards,

-don

Found this USB flash drive today at a local computer shop. It’s a brand-name 8GB drive for only USD 10, and it fits neatly into my JVC Wood Cone hi-fi.

Now I can finally put more than 2,500 high-quality 320kbps MP3s onto a tiny device. I really like it!

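I’ve been listening to the JVC EX-A15 for over a year. Its patented Wood Cone speakers sound very good, especially with vocals and orchestral music, but I always felt the bass was lacking, so it falls a little short with symphonies.

With a subwoofer, quality is entirely proportional to money, and of course I’d love something from KEF, B&W or even JBL. But they are either too big or shockingly expensive! Do I really need to spend two or three times the price of the JVC EX-A15 on a high-end subwoofer just to listen in 2.1? If I did, that would be putting the cart before the horse, a real joke!

Over the last few days I made the rounds of AV shops big and small, and finally found a compact 8-inch subwoofer that suits the size of my living room, offers excellent value, and even has a gorgeous black piano-gloss finish. The dealer was also fairly honest and polite and threw in a decent Prolink subwoofer cable. Most importantly, his reasoning made sense; in fact, he was the one who raised the point above.

It’s a Marquis MQ88 from Canada. Actually, I suspect it’s really a homegrown product at heart, since Marquis is headquartered in Richmond Hill, Toronto, an area with a large Chinese community.

Finally, during this search and purchase I put up with all kinds of sarcasm and dirty looks, and I’ve started to dislike and despise those self-important PRO AV salespeople. Many of them are simply not cut out for business, always assuming that anyone buying a low-end product has no money. They are damn frogs at the bottom of a well!

How come I keep spending money upgrading this and that lately? Could it be that Xmas is around the corner? Haha…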

Update: Dec 3, 2011

I came across a very interesting exchange on a forum by chance:

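Music really isn’t just about resolution, as if more extension at the top and bottom automatically means better… Human hearing is most sensitive in the midrange, roughly 300Hz to 1000Hz… so when a brand or a piece of equipment does better in this range, people accept it more easily… In the audio world, even with equipment costing a hundred thousand, several hundred thousand or even a million dollars, you still have to make trade-offs!

Don’t get too obsessed with hardware; spend more time properly enjoying your music!

If you focus too much on individual pieces of hardware… you will indeed run into the problem you describe. A reasonably complete audio system involves many links: the listening environment (which involves a lot of acoustics… you may need absorption and diffusion at the same time), matching with the other equipment, power, cable tuning, vibration control and so on, with endless variations…

I’ve loved music since I was young, but because of my family circumstances I was overjoyed just to afford a single vinyl record (CDs weren’t common yet). When I started working… I became an audiophile for a while and spent no less than several hundred thousand dollars. The internet wasn’t widespread then, and online reviews, user opinions and second-hand channels were few and far between… All I could do was buy audio magazines every month and go listen and compare at Mong Kok Audio on Ladies’ Market and New Era Audio on Sai Yeung Choi Street…

Hong Kong people are extremely passionate about audio, and audio manufacturers all over the world take the Hong Kong market very seriously, because there really are people who will spend a million just to get a pair of 3/5As sounding right, and people who have spent several hundred thousand on cables alone. Whenever a new product promises better sound, they are willing to try it; even at the budget end, a few RCA plugs can cost over a thousand…

The audio market has rarely produced so-called value-for-money products, because most products simply have different characteristics and different flavors (the only exception I remember is the PS Audio Ultralink many years ago, which stunned everyone! Anyone with a few years in the hobby should know it…). Unlike the computer world, you can’t judge purely by speed and price; you can only keep trying and keep combining… Some people find this tedious, others never tire of it!

As for products from mainland China, there are as many as the stars in the sky… choose carefully! I haven’t used them myself, so I won’t jump to conclusions… There may be many excellent products among them, but I believe there are also plenty of shoddy ones! After all, most ordinary users decide based on factors such as brand, support, confidence and even the second-hand market…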

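Looking back, I went through pretty much the same journey as you, brother… spending a lot on every piece of hardware, with countless mods and tweaks in pursuit of that little bit of improvement. Whenever I had free time I’d go to Sai Yeung Choi Street… always listening to the same few audiophile and test discs… repeatedly comparing whether the vocals were sweet enough, whether the sense of air was good, whether the breaking glass sounded crisp enough, whether the cannon shots in the 1812 Overture distorted…

After going round in circles, I now listen to music on my computer again, and I’m perfectly happy…

In fact, whatever equipment you use, the ultimate goal is to listen to music, not to effects. So spend more time listening to more music, building your own taste, judgment, listening skills and analytical ability, and then choose suitable equipment according to your own needs and means…

Along the way, stay objective and calm, analyze carefully, don’t just follow the crowd, and don’t waste money recklessly…

Once you fully enter the “fever” stage, never mind hearing better equipment and better sound, even a passing hypothesis or idea in your head… will keep you from stopping!

Audio enthusiasts can roughly be divided into two types:

1) Those who know how to use suitable equipment to enjoy and appreciate the music they like, spend money sensibly, get the most out of equipment matching within a limited listening environment, accept trade-offs, and seek only a balance point…

2) Those who constantly use music and audiophile test discs to test their equipment (just as the brother above described), don’t care about price, constantly swap equipment and cables, keep retuning… always chasing the new and discarding the old, unable to stop, with no end in sight!

If you find you have type 2 symptoms, you are already deeply hooked… all you can do is try to pull yourself out…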

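I’ve been there myself, so how could I not understand the feeling!!

But precisely because the temptation is so great, you need to stay calm and think carefully about what you really need… because everyone’s taste and needs can be completely different. For example, someone wants to buy an amp and you recommend a Class A model famous for beautiful vocals, only to find out he uses it for rock.

I’m not against modding equipment or using tweaks, but do it in moderation and tailor it to the case, so you don’t waste money, pay the tuition and learn nothing. For example, someone hears that a certain mod will completely transform a certain unit, so he buys a new one and has someone copy the mod exactly. The sound does improve, but ask the owner what exactly improved and he can’t answer at all, because he knows nothing about the unit’s own sonic signature or the character and effect of each tweak… This fast-food approach completely skips the understanding and enjoyment you gain along the way; the money is spent but nothing is learned… Perhaps the unit’s sonic signature doesn’t suit his taste at all and another would be more suitable… or modding just one thing would already have met his needs…

So know clearly what you really need, and then get the greatest satisfaction for the least spending…

Remember that “satisfaction” is relative, not absolute. For example, someone buys a pair of computer speakers for a thousand dollars, and after a small mod they rival a six-thousand-dollar mini hi-fi; he feels very satisfied and happily listens to music…

On the other hand, someone spends twenty thousand on a hi-fi system but feels it is only slightly better than a six-thousand-dollar mini hi-fi; he is very upset, fiddles with it all day, tries this and that, and barely has time to listen to music…

Which one are you??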

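That’s how it is… Years ago a friend asked me to help him choose a 2-channel system. One of the components was a British-made 50-60W solid-state integrated amplifier costing around 10K. Judging only by its looks, its ordinary internal parts and its very simple circuitry… you’d find it hard to imagine or explain why it sounded so wonderful. At the time it was driving a pair of ProAc 1SC speakers…

The choice of electronic components is an important part… but more important are the circuit design and the maker’s or designer’s understanding of music and philosophy… If simply using premium, expensive parts guaranteed good sound, I believe every audio company in the world could produce hi-end equipment!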

I will soon purchase an SSD for testing ESX performance on a PowerEdge R710 (H700 512MB RAID card). After researching a bit on Storage Review and AnandTech, I narrowed it down to two of the most reliable brand-name SSDs:

Crucial M4 128GB 2.5″ SATA 3 6Gb/s SSD (FIRMWARE 009)

Intel 320 Series 120GB (Retail Box w/External Case) G3 Postville SSDSA2CW120G3B5 2.5″ SATA SSD

![m4-ssd](http://www.modelcar.hk/wp-content/uploads/2011/12/m4-ssd1.jpg)

1. Intel has two more years of warranty (5 years) vs. Crucial’s 3 years.

Locally, Intel’s warranty is handled by Synnex (聯強) and Crucial’s by Xander (建達); I’ve had good experiences with both.

2. The Intel retail box comes with a USB 3 connector (external case), and I really like this! After the testing, I can still use the drive with my desktop’s USB 3.0.

3. The Crucial M4 is much faster in sequential read/write, and its random IOPS is extremely impressive according to various benchmarks; random IOPS is what SSDs are all about for VMs.

http://www.anandtech.com/print/4256

http://www.anandtech.com/print/4421

4. Intel offers a nice free GUI, “Intel SSD Toolbox”, with nicer features too, like updating the firmware and erasing the SSD to restore like-new speed. The M4 has nothing comparable in this area.

5. Intel has Power Loss Data Protection; the M4 has nothing. However, the Intel 320 had a dark period three months ago, when losing power could turn the SSD into an 8MB drive (the “8MB bug”), so maybe it’s just a gimmick from Intel as always.

6. The Intel 320 is still SATA2 (3Gbps); the Crucial M4 is SATA3 (6Gbps).

7. Both the Intel 320 and the Crucial M4 use 25nm NAND.

8. I also found out that the latest Crucial M4 is the SAME as the Crucial C400 (just a different name), and it’s the newer generation of the famous Crucial C300.
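On item 6: "3Gbps" and "6Gbps" are line rates, not data rates. SATA uses 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. A quick conversion to the interface ceiling, ignoring protocol overhead, so real drives land somewhat below it:

```python
def sata_payload_mb_per_s(line_rate_gbps):
    """Interface ceiling in MB/s (decimal) for a SATA link rate in Gbps."""
    bits_per_s = line_rate_gbps * 1e9
    data_bits_per_s = bits_per_s * 8 / 10  # 8b/10b: 8 data bits per 10 line bits
    return data_bits_per_s / 8 / 1e6       # bits -> bytes -> MB


for gen, rate in [("SATA2", 3), ("SATA3", 6)]:
    print(gen, sata_payload_mb_per_s(rate), "MB/s")
```

So SATA2 tops out around 300MB/s and SATA3 around 600MB/s. The gap matters mainly for sequential throughput; small random 4K I/O rarely comes close to either ceiling.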

I don’t like OCZ, as I’ve read so many negative reports about their firmware problems.

It seems the Crucial M4 128GB SSD is the right one for me: it’s 25nm, SATA3 (6Gbps), and 2-3 times faster than the Intel 320 120GB SSD in random 4K IOPS.

I have decided to go for the M4. I can live without the Intel SSD Toolbox and the USB 3.0 case; a compatible USB 3.0 SSD enclosure should be very easy to find for the M4.

All I want is the huge IOPS. A single Crucial M4 is advertised at 40,000 4K IOPS, which is equivalent to 10 EqualLogic PS6000XV boxes with 16 x 15K RPM disks each. That’s 160 15K RPM spindles compared to 1 SSD, absolutely ridiculous! Not to mention the cost is 1,000 times LESS, OMG!!! I CAN’T BELIEVE MY CALCULATION!!!

That’s in theory. I’ve read that the M4’s actual real-life 4K IOPS at 60% random / 65% read is about 8,000, which is equivalent to 2 PS6000XVs with 16 x 15K RPM disks each. Still, that’s amazing! 1 SSD kills 32 enterprise 15K RPM SAS disks in terms of IOPS. On the other hand, over long periods of sustained load, 15K RPM SAS still proves better than SSD: the extremely high number can only be sustained for the first few minutes, then drops rapidly to 1/10 over a period of several hours. So if you have a heavy OLTP or transaction-based application running 24/7, 15K RPM SAS is still the only choice.
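The spindle comparison above can be reproduced with a few lines of arithmetic. The 250 IOPS per 15K spindle is not a measured number; it is simply what the post's "40,000 IOPS = 160 spindles" comparison implies, so plug in your own per-disk figure if you have one. The 16 disks per PS6000XV comes from the post:

```python
import math

DISKS_PER_BOX = 16            # PS6000XV with 16 x 15K RPM disks (from the post)
IOPS_PER_15K = 40_000 / 160   # 250 IOPS per spindle, implied by the post's comparison


def spindles_equivalent(ssd_iops, iops_per_spindle=IOPS_PER_15K):
    """How many 15K spindles it takes to match a given IOPS figure."""
    return ssd_iops / iops_per_spindle


def boxes_equivalent(ssd_iops):
    """Whole 16-disk arrays needed to reach the same IOPS."""
    return math.ceil(spindles_equivalent(ssd_iops) / DISKS_PER_BOX)


for label, iops in [("advertised", 40_000), ("real-world mix", 8_000), ("sustained (1/10)", 800)]:
    print(label, spindles_equivalent(iops), boxes_equivalent(iops))
```

The last row applies the post's "drops to 1/10" observation to the real-world figure, which is where the SSD's advantage largely disappears.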

So who cares about Intel’s extra 2 years of warranty? If I want reliability later, all I need to do is buy another unit and make it a RAID 1.

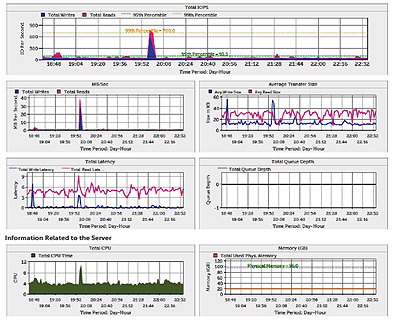

During the EqualLogic seminar yesterday, Dell’s storage consultant introduced DPACK (Dell Performance Analysis Collection Kit) to us.

Basically, it’s a monitoring and reporting utility that runs over a period of time (4-24 hours) to collect data (such as CPU, memory, IOPS, network traffic, etc.) from your physical or virtual servers.
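Capacity planning from this kind of data usually comes down to average versus peak load. A minimal sketch of that summary step, assuming evenly spaced IOPS samples and a nearest-rank 95th percentile; it is illustrative only and not how DPACK itself builds its report:

```python
import math
import statistics


def summarize_iops(samples):
    """Average, nearest-rank 95th percentile and peak of IOPS samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Nearest-rank method: the value at 1-based position ceil(0.95 * n).
    rank = math.ceil(0.95 * len(ordered))
    return {
        "avg": statistics.mean(ordered),
        "p95": ordered[rank - 1],
        "peak": ordered[-1],
    }
```

Sizing storage to the 95th percentile rather than the average is a common choice, though which percentile to use is a judgment call; a collection window of only a few hours may also miss weekly or month-end peaks.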

The best thing is that the program’s footprint is very small, about 1.5MB. Still remember that crappy Dell Management Console 2.0?

Even better, you don’t need to install it at all: simply run it, key in the server or vCenter parameters, select a time range, run it, wait a few hours, and that’s it.

The output is a single file with the extension .iokit, which you are supposed to feed to the online generator to get the DPACK report as a PDF (http://go.us.dell.com/DPACK). However, the site is currently not working, as it’s still not ready according to the DPACK team.

So what to do? The DPACK team was kind enough: I sent the result.iokit to them, and Dell generated the PDF for me manually.

The Overview of DPACK and Download DPACK.

Finally, I would say it’s a lite version of Veeam Monitor, which does a lot more and is also free.

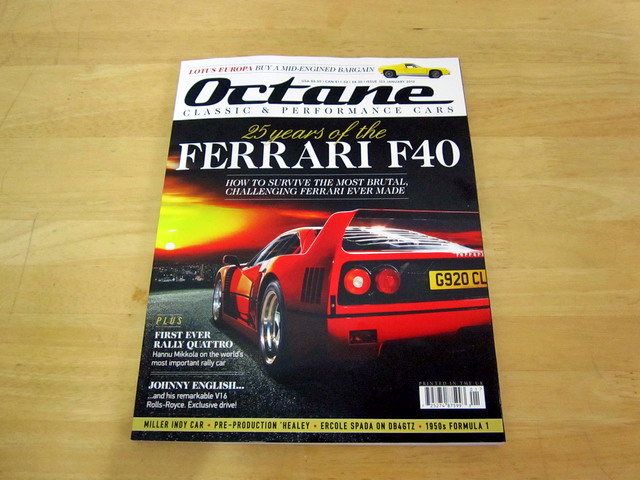

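I thought it would take until at least next week to ship from the UK to Hong Kong, but to my surprise the latest issue of Octane was already on the bookstore’s magazine rack today, almost at the same time as in the UK. Very nice!

As a die-hard F40 fan, of course I couldn’t miss this issue.

Also, a local shop has finally started selling Kyosho’s black lightweight upgraded F40 with red seats. It looks really good and was heavily discounted, but in the end I passed on it.

The reason is that the black paint is really nothing to write home about: after only a few weeks on the market it had already developed small bubbles. This is a scale model costing over a thousand! How can Kyosho care so little about quality? It’s baffling. Could it be that all the defective stock ends up in Asia?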

Despite multiple delays, Veeam has finally launched its flagship product, Veeam Backup & Replication version 6.

One of the most useful features I found is “One Click Restore”: most of the time we don’t have the root password for our clients’ servers, and clients often ask us to recover mistakenly deleted files, so this enhancement alone is a key reason for anyone to upgrade to v6.

Besides, the new and improved replication is now 10x faster (see this appreciation post), and together with bandwidth throttling, we can finally replicate smoothly over our 100Mbps link between the production and DR sites.
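Bandwidth throttling is commonly implemented as a token bucket: tokens accrue at the allowed rate up to a burst limit, and data is sent only when enough tokens are available. The sketch below is that generic technique, not Veeam's implementation; timestamps are passed in explicitly so the behavior is deterministic:

```python
class TokenBucket:
    """Generic token-bucket rate limiter (a sketch, not Veeam's implementation)."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes  # sends larger than this are never allowed
        self.tokens = burst_bytes    # start with a full bucket
        self.last = 0.0              # timestamp of the previous call, in seconds

    def allow(self, nbytes, now):
        """Return True (and spend tokens) if nbytes may be sent at time `now`."""
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False
```

For example, capping replication at half of a 100Mbps link would mean a rate of 100e6 / 2 / 8 = 6.25 million bytes per second.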

Last but not least, there is the scalability of backup agents (a “server” in Veeam’s terms). Veeam B&R 6.0 now uses a proxy concept, so you have a group of backup agents/proxies that evenly distribute the load among them. Best of all, you no longer have to log in to each backup server (or agent); you can manage all of your backup jobs on all proxies from a single console. Isn’t this just beautiful!
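One simple way to spread jobs across a pool of proxies is greedy largest-first assignment to whichever proxy currently has the least work. This is a hypothetical illustration of the load-distribution idea, not Veeam's actual scheduler, and job sizes in GB stand in for real load:

```python
import heapq


def assign_jobs(job_sizes_gb, proxies):
    """Greedy largest-first assignment to the least-loaded proxy (hypothetical)."""
    # Min-heap of (current load in GB, proxy name).
    heap = [(0.0, name) for name in proxies]
    heapq.heapify(heap)
    plan = {name: [] for name in proxies}
    # Placing big jobs first keeps the final loads closer together.
    for job, size in sorted(job_sizes_gb.items(), key=lambda kv: kv[1], reverse=True):
        load, name = heapq.heappop(heap)
        plan[name].append(job)
        heapq.heappush(heap, (load + size, name))
    return plan


print(assign_jobs({"fileserver": 100, "exchange": 80, "sql": 60, "web": 40}, ["proxy1", "proxy2"]))
```

With these made-up sizes both proxies end up with 140GB of work.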

What about v6 now supporting Hyper-V? Dohhhh… In my opinion Hyper-V is still not a mature product. It’s fine for lab use, but for the enterprise? No way!

After losing a lot of sleep over the weekend working out the kinks and fixing my mistakes, v6 is running great and I can’t believe the speed increase!

Just a few examples:

- Replicating a file server with 750GB of data, down to 25 minutes total (replicating the data disk only took 6:30)!

- Replication job on my Exchange server w/ 400GB of mailbox data… 20 minutes

- Backup job with 6 VMs ranging from 50-120GB, 35 minutes

- Replication of a 60GB VM running Accounting software over a 20Mbps WAN… wait for it… 4 1/2 minutes!

The kicker here is I’m running 3-4 jobs in parallel now. My backup window has gone from 6+ hours to around 2 hours for nearly 3TB of VM data and I’m finally saturating my disk writes on the backup target. There are, of course, a few minor bugs, but I’m impressed with the quality for a first release after such major architecture changes.
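Some back-of-the-envelope arithmetic on these numbers, assuming decimal units and a fully saturated link with no protocol overhead (so an upper bound). A 20Mbps WAN can carry at most about 675MB in 4.5 minutes, roughly 1.1% of a 60GB VM, so that replication run presumably sent only changed blocks, possibly compressed. Nearly 3TB in around 2 hours averages a bit over 400MB/s:

```python
def max_bytes_transferred(link_mbps, seconds):
    """Upper bound: link fully saturated for the whole job, no protocol overhead."""
    return link_mbps * 1e6 / 8 * seconds


def avg_throughput_mb_s(total_bytes, seconds):
    """Average throughput in MB/s (decimal)."""
    return total_bytes / seconds / 1e6


wan_bytes = max_bytes_transferred(20, 4.5 * 60)   # 20Mbps for 4.5 minutes
share_of_vm = wan_bytes / 60e9                    # fraction of a 60GB VM
backup_mb_s = avg_throughput_mb_s(3e12, 2 * 3600) # 3TB in 2 hours

print(wan_bytes / 1e6, "MB max over the WAN")
print(f"{share_of_vm:.2%} of the VM")
print(f"{backup_mb_s:.0f} MB/s average backup throughput")
```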