EqualLogic PS6000XV Tested with the VMware Unofficial Storage Performance IOMeter Parameters

Unofficial Storage Performance Part 1 & Part 2 on VMTN

Here are my results from the newly set up EQL PS6000XV. I noticed the OEM hard disks are Seagate Cheetah 15K.7 (6Gbps) even though the PS6000XV is a 3Gbps array (I originally thought they would ship me the 3Gbps Seagate Cheetah 15K.6). The drive firmware has also been updated to EN01 instead of the EN00 shown.

I also spent half a day today running the same test on servers of different generations, covering local storage, DAS and SAN.

The results make sense and look reasonable once you dig into them.

That is: RAID10 > RAID5, SAN > DAS >= Local, and the EQL PS6000XV rocks, despite a warning saying all 4 links were 99.9% saturated during the sequential tests. (That happened because I increased the workers to 5. It is not in the results here but in a separate Max Throughput-100%Read test.)
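To put the saturation warning in context, here is a rough ceiling for the array's iSCSI links. It is only a sketch: it assumes each of the 4 links is 1 Gbit/s Ethernet and ignores TCP/iSCSI overhead, so real throughput will land lower. The function name is made up for illustration.

```python
def link_ceiling_mb_per_sec(links, gbit_per_link=1.0):
    """Raw line-rate ceiling in decimal MB/s, with no protocol overhead."""
    return links * gbit_per_link * 1000 / 8


ceiling = link_ceiling_mb_per_sec(4)   # assumption: 4 x 1GbE links
single_worker = 319.48                 # Max Throughput-100%Read, VM on ESX 4.1 result
print(f"ceiling: {ceiling:.0f} MB/s, single worker reached {single_worker / ceiling:.0%}")
```

With one worker the VM reached roughly two thirds of that raw ceiling, which fits with the extra workers being what pushed the links to saturation.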

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

TABLE OF RESULTS

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

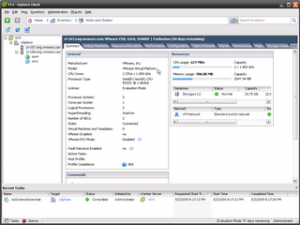

SERVER TYPE: VM on ESX 4.1 with EQL MEM Plugin

CPU TYPE / NUMBER: vCPU / 1

HOST TYPE: Dell PER710, 96GB RAM; 2 x XEON 5650, 2.66 GHz, 12 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Equallogic PS6000XV x 1 (15K), / 14+2 600GB 15K Disks (Seagate Cheetah 15K.7) / RAID10 / 500GB Volume, 1MB Block Size

SAN TYPE / HBAs : ESX Software iSCSI, Broadcom 5709C TOE+iSCSI Offload NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..5.4673……….10223.32………319.48

RealLife-60%Rand-65%Read……15.2581……….3614.63………28.24

Max Throughput-50%Read……….6.4908……….4431.42………138.48

Random-8k-70%Read……………..15.6961……….3510.34………27.42

EXCEPTIONS: CPU Util. 83.56, 47.25, 88.56, 44.21%;
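A quick way to sanity-check a result block is to recompute the MB/s column from the IOPS column. The sketch below assumes 32 KB transfers for the two Max Throughput tests and 8 KB for RealLife and Random-8k. Those block sizes are my assumption (they are not stated in this post), though the numbers in this block line up with them.

```python
KIB_PER_MIB = 1024


def expected_mb_per_sec(iops, block_kib):
    """Throughput implied by an IOPS figure at a fixed transfer size."""
    return iops * block_kib / KIB_PER_MIB


# (test name, reported IOPS, assumed block size in KB, reported MB/s)
rows = [
    ("Max Throughput-100%Read", 10223.32, 32, 319.48),
    ("RealLife-60%Rand-65%Read", 3614.63, 8, 28.24),
    ("Max Throughput-50%Read", 4431.42, 32, 138.48),
    ("Random-8k-70%Read", 3510.34, 8, 27.42),
]

for name, iops, block_kib, reported in rows:
    implied = expected_mb_per_sec(iops, block_kib)
    flag = "ok" if abs(implied - reported) < 0.01 else "CHECK"
    print(f"{name:26s} implied {implied:8.2f}  reported {reported:8.2f}  {flag}")
```

A row that does not match is not necessarily wrong: a different access specification or more than one worker would change the relationship.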

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 1

HOST TYPE: Dell PER610, 12GB RAM; E6520, 2.4 GHz, 4 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Equallogic PS6000XV x 1 (15K), / 14+2 600GB 15K Disks (Seagate Cheetah 15K.7) / RAID10 / 500GB Volume, 1MB Block Size

SAN TYPE / HBAs : Broadcom 5709C NICs with 2 paths only (ie, 2 physical NICs to SAN)

Worker: Using 2 Workers to push PS6000XV to its IOPS peak!

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..14.3121……….6639.48………207.48

RealLife-60%Rand-65%Read……12.8788……….7197.69………150.51

Max Throughput-50%Read……….11.3125……….6837.76………213.68

Random-8k-70%Read……………..13.7343……….6739.38………142.22

EXCEPTIONS: CPU Util. 25.99, 24.10, 28.22, 25.36%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 1

HOST TYPE: Dell PER610, 12GB RAM; E6520, 2.4 GHz, 4 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Equallogic PS6000XV x 1 (15K), / 14+2 600GB 15K Disks (Seagate Cheetah 15K.7) / RAID10 / 500GB Volume, 1MB Block Size

SAN TYPE / HBAs : Broadcom 5709C NICs with 2 paths only (ie, 2 physical NICs to SAN)

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……8.7584………5505.30………172.04

RealLife-60%Rand-65%Read……12.5239………4032.84………31.51

Max Throughput-50%Read………6.8786………6455.76………201.74

Random-8k-70%Read……………14.96………3435.59………26.84

EXCEPTIONS: CPU Util. 19.37, 10.33, 18.28, 9.78%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 1

HOST TYPE: Dell PER610, 12GB RAM; E6520, 2.4 GHz, 4 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, PERC H700 (LSI), 512MB Cache with BBU, 4 x 300 GB 10K SAS/ RAID5 / 450GB Volume

SAN TYPE / HBAs : Broadcom 5709C NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..2.7207……….22076.17………689.88

RealLife-60%Rand-65%Read……50.4486……….906.69………7.08

Max Throughput-50%Read……….2.5429……….22993.78………718.56

Random-8k-70%Read……………..55.1896……….841.89………6.58

EXCEPTIONS: CPU Util. 6.32, 6.94, 5.95, 6.98%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: Dell PE2450, 2GB RAM; 2 x PIII-S, 1.26 GHz, 2 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, PERC3/Si (Adaptec), 64MB Cache, 3 x 36GB 10K U320 SCSI / RAID5 / 50GB Volume

SAN TYPE / HBAs : Intel Pro 100 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..44.1448……….1326.03………41.44

RealLife-60%Rand-65%Read……93.1499……….456.88………3.57

Max Throughput-50%Read……….143.9756……….269.51………8.42

Random-8k-70%Read……………..80.27……….502.63………3.93

EXCEPTIONS: CPU Util. 23.33, 13.23, 11.65, 12.51%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: DIY, 3GB RAM; 2 x PIII-S, 1.26 GHz, 2 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, LSI Megaraid 4D (LSI), 128MB Cache, 4 x 300GB 7.2K SATA / RAID5 / 900GB Volume

SAN TYPE / HBAs : Intel Pro 1000 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..15.1582……….3882.81………121.34

RealLife-60%Rand-65%Read……60.2697……….499.05………3.90

Max Throughput-50%Read……….2.8170……….2337.38………73.04

Random-8k-70%Read……………..152.8725……….244.40………1.91

EXCEPTIONS: CPU Util. 16.84, 18.79, 15.20, 17.47%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: Dell PE2650, 4GB RAM; 2 x Xeon, 2.8 GHz, 2 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, PERC3/Di (Adaptec), 128MB Cache, 5 x 36 GB 10K U320 SCSI / RAID5 / 90GB Volume

SAN TYPE / HBAs : Broadcom 1000 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..33.9384……….1743.55………54.49

RealLife-60%Rand-65%Read……111.2496……….310.62………2.43

Max Throughput-50%Read……….55.7005……….518.47………16.20

Random-8k-70%Read……………..122.5364……….317.95………2.48

EXCEPTIONS: CPU Util. 7.66, 6.97, 7.78, 9.27%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: DIY, 3GB RAM; 2 x PIII-S, 1.26 GHz, 2 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage (DAS), PowerVault 210S with LSI Megaraid 1600 Elite (LSI), 128MB Cache with BBU, 12 x 73GB 10K U320 SCSI split into two channels of 6 disks each / RAID5 / 300GB Volume x 2, fully utilizing the RAID card's two U160 interfaces.

SAN TYPE / HBAs : Intel Pro 1000 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..28.9380……….3975.19………124.22

RealLife-60%Rand-65%Read……30.2154……….2913.15………84.17

Max Throughput-50%Read……….31.0721……….3107.95………97.12

Random-8k-70%Read……………..33.0845……….2750.71………78.00

EXCEPTIONS: CPU Util. 23.91, 22.02, 26.01, 20.24%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: DIY, 4GB RAM; 2 x Opteron 285, 2.4GHz, 4 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, Areca ARC-1210, 128MB Cache with BBU, 4 x 73GB 10K WD Raptor SATA / RAID 5 / 200GB Volume

SAN TYPE / HBAs : Broadcom 1000 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..0.2175……….10932.45………341.64

RealLife-60%Rand-65%Read……88.3245……….393.66………3.08

Max Throughput-50%Read……….0.2622……….9505.30………296.95

Random-8k-70%Read……………..109.6747……….336.66………2.63

EXCEPTIONS: CPU Util. 14.11, 7.04, 13.23, 7.80%;

##################################################################################

SERVER TYPE: Physical

CPU TYPE / NUMBER: CPU / 2

HOST TYPE: Tyan, 8GB RAM; 2 x Opteron 285, 2.4GHz, 4 Cores Total

STORAGE TYPE / DISK NUMBER / RAID LEVEL: Local Storage, LSI Megaraid 320-2X, 256MB Cache with BBU, 4 x 36GB 15K U320 SCSI / RAID 5 / 90GB Volume

SAN TYPE / HBAs : Broadcom 1000 NIC

##################################################################################

TEST NAME——————-Av. Resp. Time ms——Av. IOs/sek——-Av. MB/sek——

##################################################################################

Max Throughput-100%Read……..0.4261……….7111.26………222.23

RealLife-60%Rand-65%Read……30.1981……….498.56………3.90

Max Throughput-50%Read……….0.5457……….5974.71………186.71

Random-8k-70%Read……………..42.7504……….496.88………3.88

EXCEPTIONS: CPU Util. 29.71, 24.51, 27.74, 32.93%;

##################################################################################
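If you want to compare the result blocks side by side, the rows can be pulled into numbers with a few lines of Python. This is a minimal sketch that assumes every result row has the test name followed by three values separated by runs of "…" and "." as in the blocks above. The names `SEP` and `parse_row` are hypothetical.

```python
import re

# A separator is a run of ellipsis characters, optionally padded with plain dots.
# A decimal point inside a number is never followed by "…", so it is left alone.
SEP = re.compile(r"\.*…+\.*")


def parse_row(line):
    """Split one result row into its test name and three numeric columns."""
    name, resp_ms, iops, mb_s = SEP.split(line.strip())
    return {
        "test": name,
        "resp_ms": float(resp_ms),
        "iops": float(iops),
        "mb_s": float(mb_s),
    }


row = parse_row("Max Throughput-100%Read……..5.4673……….10223.32………319.48")
print(row["test"], row["iops"], row["mb_s"])
```

From there it is easy to sort the configurations by random IOPS, which is where the SAN and the DAS shelf pull away from the small local RAID5 sets.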

